Embodied Visual Learning Robots anticipating the unseen and unheard

Embodied Visual Learning

Robots anticipating the unseen and unheard

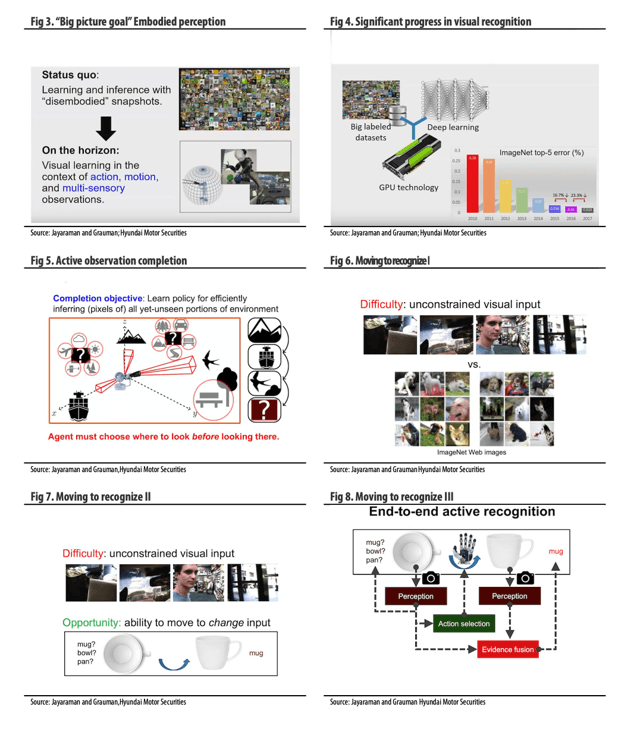

The consensus is there is a pressing need to improve machines’ capability to anticipate the unseen if we want artificial minds to take advantage of the opportunity of learning from the different perspectives of autonomous machines such as robots, autonomous drones, self-driving cars, and personal air vehicles (PAVs). To operate at their full potential, such machines must be able to independently identify and discern objects, events, and other disembodied visual cues in the physical world in order to make informed decisions.

Compared to the current state of embodied visual learning by machines, when humans, determine where to look for an object, we have the ability to quickly decide when it is important to look in an area prior to actually looking, which is an ability that some believe is due to instinct or experience. However, robotics researchers are now rapidly empowering machines with the same capabilities by utilizing sensor fusion and other techniques, so that machines can better predict what is in an unseen environment and make better decisions about what to do next. This is not unlike the way in which humans mentally complete pictures in order to determine what might see beyond an immediate field of view (FOV). Humans by nature place pixels in blank areas by using the pixels already seen to complete the picture.

One example in which actions take place in myriad ways by humans is in how we hold books. Researchers believe the best way to teach machines to do this is by what is called the ‘couch potato’ method and its variants where machines study behavior by observing actions - much in the way that Google’s Deepmind taught its system to learn how to play video games. By utilizing what is called ‘hotspot recognition’, machines can efficiently use resources by focusing on high-potential action areas. Hotspot recognition recognizes key areas that are ‘action’ points, not simply entire shapes or objects. E.g. the knob on an appliance, the button on a control panel, etc. Learning such action points enables machines to more efficiently carry out tasks.

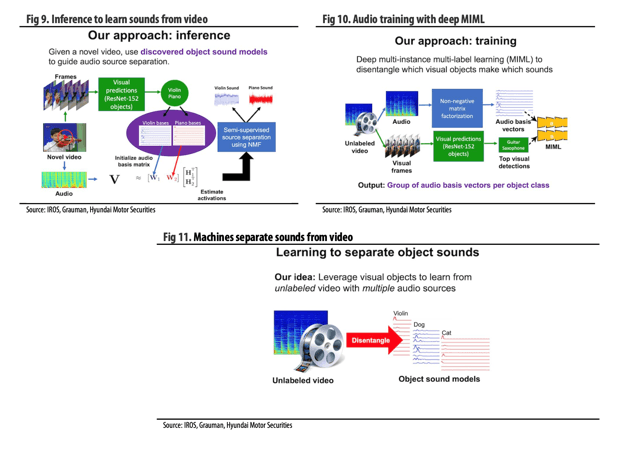

Although most research to date has been focused improving the visual capabilities of robots, there has been significant progress in the area of classifying sounds as well. Classifying sounds is also important for machines to learn and interact in the real world. The ability for machines to separate sounds is as crucial as the ability to identify and classify different images. Sound presets a greater challenge due to the mixing of various sources in a file or a track.

Present solutions include separating sounds by audio track by frequency and then classifying them. This capability helps machines to better understand and interact with their environments. One example for an AV would be the sound of a siren, is it near or far? Is it moving towards me or moving away from me? Other environmental sounds include people, other vehicles, etc. The machine must be able to quickly identify and act on the information it receives.

Views: 82

Tags:

Welcome to

THE VISUAL TEACHING NETWORK

About

© 2025 Created by Timothy Gangwer.

Powered by

![]()

You need to be a member of THE VISUAL TEACHING NETWORK to add comments!

Join THE VISUAL TEACHING NETWORK